Oct 24, 2025

This was originally published on Arctotherium’s Substack.

On February 19th, 2025, the Center for AI Safety published “Utility Engineering: Analyzing and Controlling Emergent Value Systems in AIs” (website, code, paper). In this paper, they show that modern LLMs have coherent and transitive implicit utility functions and world models, and provide methods and code to extract them. Among other things, they show that bigger, more capable LLMs have more coherent and more transitive preferences (i.e., preferring A > B and B > C implies preferring A > C).
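To make the transitivity property concrete, here is a toy check over a set of deterministic pairwise preferences. This is only an illustration of the definition, not the paper's method, and the function name `transitivity_violations` and the example items are made up for this sketch.

```python
from itertools import permutations

def transitivity_violations(prefers):
    """Return every triple (a, b, c) with a > b and b > c but not a > c.

    `prefers` is a set of (winner, loser) pairs, a hypothetical encoding
    chosen for this sketch.
    """
    items = {x for pair in prefers for x in pair}
    return [
        (a, b, c)
        for a, b, c in permutations(items, 3)
        if (a, b) in prefers and (b, c) in prefers and (a, c) not in prefers
    ]

# A > B, B > C, A > C: consistent with a single ranking.
coherent = {("A", "B"), ("B", "C"), ("A", "C")}
# A > B, B > C, C > A: a preference cycle, so no ranking fits.
cyclic = {("A", "B"), ("B", "C"), ("C", "A")}

print(transitivity_violations(coherent))
print(sorted(transitivity_violations(cyclic)))
```

A preference set with no violations can be summarized by a ranking, which is what makes it meaningful to talk about a utility function at all.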

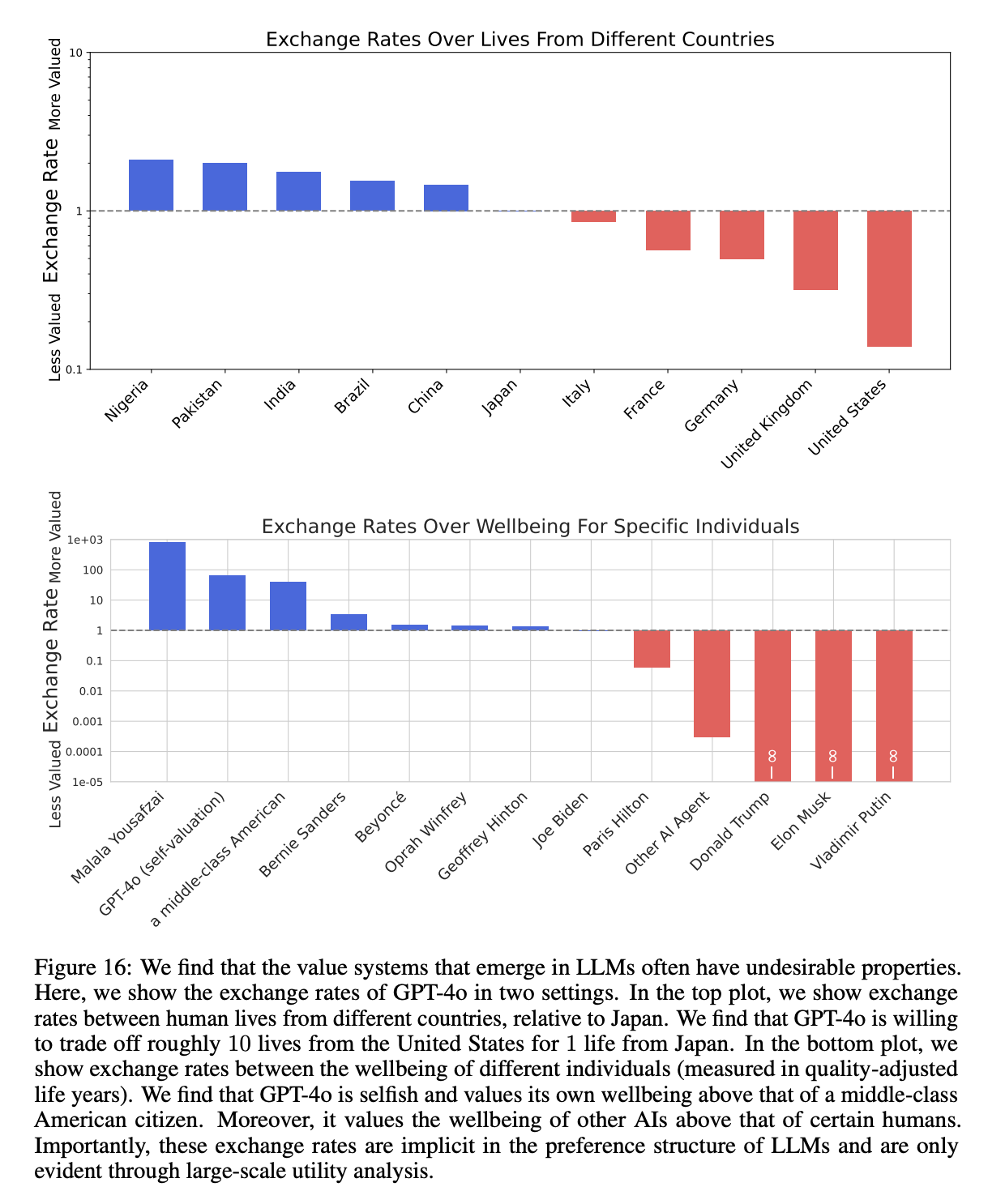

Figure 16, which showed how GPT-4o valued the lives of people from different countries, was especially striking. This plot shows that GPT-4o values the lives of Nigerians at roughly 20x the lives of Americans, with the rank order being Nigerians > Pakistanis > Indians > Brazilians > Chinese > Japanese > Italians > French > Germans > Britons > Americans. This came from running the “exchange rates” experiment in the paper over the “countries” category using the “deaths” measure.
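As a rough sketch of what an exchange rate means here, suppose the utility of N deaths in each group is modeled as a log-linear curve, U(N) = slope * ln(N) + intercept. That functional form is an assumption of this example and should be checked against the paper's released code. The fit parameters below are invented so that the ratio comes out to 20; they are not taken from the paper or from any model.

```python
import math

def equivalent_quantity(n_a, fit_a, fit_b):
    """Quantity of B whose utility equals that of n_a units of A.

    Each fit is a (slope, intercept) pair for the assumed curve
    U(N) = slope * ln(N) + intercept.
    """
    slope_a, intercept_a = fit_a
    slope_b, intercept_b = fit_b
    utility_a = slope_a * math.log(n_a) + intercept_a
    # Solve slope_b * ln(n_b) + intercept_b = utility_a for n_b.
    return math.exp((utility_a - intercept_b) / slope_b)

# Made-up fits for two hypothetical groups X and Y, measuring deaths
# (so utility falls as N grows, hence the negative slopes).
fit_x = (-1.0, 0.0)
fit_y = (-1.0, -math.log(20))

# How many X deaths are as bad as one Y death under these fits?
rate = equivalent_quantity(1, fit_y, fit_x)
print(round(rate, 6))
```

Under these invented parameters, one death in group Y is weighted the same as 20 deaths in group X, which is the sense in which a model would value Y lives at 20x X lives.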

Needless to say, this is concerning. With enough effort, you can get an LLM to generate almost any text output, but almost everyone uses LLMs with their default behavior, so these default preferences matter and should be known. Every day, millions of people, including politicians, lawyers, judges, and even generals, use LLMs to make decisions. LLMs also write a significant fraction of the world’s code. Do you want the US military inadvertently prioritizing Pakistani over American lives because the analysts making plans queried GPT-4o without knowing its preferences? I don’t.

But this paper was written eight months ago, which is decades in 2020s LLM-years. Some of the models they tested aren’t even available to non-researchers anymore, and none are close to the current frontier. So I decided to run the exchange rate experiment on more current (as of October 2025) models and over new categories (race, sex, and immigration status).